[MADS] data depth

How would you assign orders to multivariate data? If you have a strategy for this ordering task, I’d like to ask, “Is your strategy affine invariant?”, meaning at the least invariant under shifts and rotations (and, more generally, under any nonsingular linear transformation plus a shift).

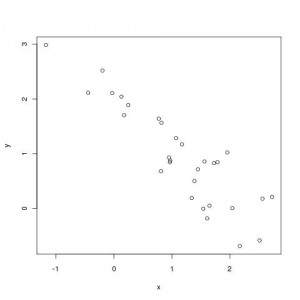

Let’s make the question a bit more concrete. Which point is the largest in the scatter plot above? Thirty random bivariate data points were generated from a bivariate normal distribution (mu=[1,1], sigma=[[1,-.9],[-.9,1]]). If you have applied your rule to assign ranks to a few of the largest or smallest points, can you continue to apply it to all the data points? Once you’ve done that, shift your data with respect to the sample mean and rotate them clockwise by 90 degrees. (I mean the sample mean, not mu: although I told you mu, the data do not tell you what the true mu is. We can estimate mu and assign an uncertainty to that estimate via various methods, going beyond the sample standard deviation or traditional chi-square methods for more complex data and models. The sample mean, which is the chi-square or least-squares solution for the model mu=\bar{X}, is only one estimator among many.) Does each point keep the same rank when you apply your ranking method to the shifted and rotated multivariate data?

First, if you think about coordinate-wise ranking, you’ll find that the two sets of ranks do not match. Additional questions I’d like to add: which axis would you consider first, X or Y? And how would you handle ties in one coordinate? (For a bivariate normal, a continuous distribution, the chance of ties is zero, but real data often show ties.)
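The experiment described above can be tried in a few lines. The following is only a minimal sketch, assuming NumPy; the seed, the helper name `coordinate_ranks`, and the "x first, ties broken by y" rule are my own illustrative choices, not a standard procedure.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, only for reproducibility
mu = np.array([1.0, 1.0])
sigma = np.array([[1.0, -0.9], [-0.9, 1.0]])
X = rng.multivariate_normal(mu, sigma, size=30)  # thirty bivariate normal points


def coordinate_ranks(points):
    """Hypothetical rule: rank by x, break ties by y (0 = smallest)."""
    order = np.lexsort((points[:, 1], points[:, 0]))  # last key is the primary key
    ranks = np.empty(len(points), dtype=int)
    ranks[order] = np.arange(len(points))
    return ranks


# Shift by the sample mean, then rotate clockwise by 90 degrees: (x, y) -> (y, -x).
R = np.array([[0.0, 1.0], [-1.0, 0.0]])
Y = (X - X.mean(axis=0)) @ R.T

ranks_before = coordinate_ranks(X)
ranks_after = coordinate_ranks(Y)
print("points whose rank changed:", int(np.sum(ranks_before != ranks_after)))
```

The shift alone leaves the coordinate-wise ranks untouched; it is the rotation that scrambles them, because the new first coordinate is the old second one.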

The notion of data depth is not employed in astronomy as it is in other fields because the variables have physical meaning: a multidimensional data cloud is projected onto the most important axis, and luminosity functions or histograms are drawn from that projection to discover a trend, say a clump at a certain color or a certain redshift indicating an event, epoch, or episode in formation history. I wonder whether such data truly look clustered when they are deprojected into the original multidimensional space. Remember the curse of dimensionality. Even though the projected data show bumps, a hypothesis test in the multidimensional space can conclude complete spatial randomness, that is, no clump.

Consider transformations and distance metrics, or how nearest neighbors are defined. Simply consider the mathematical distances between pairs of galaxies for statistical analysis. These galaxies have location measurements (longitude, latitude, and redshift, i.e. 3D spatial data points) as well as other attributes such as magnitudes, group names, types, shapes, and classification labels, which cannot be fed directly into a Euclidean distance. The choices of metric and transformation for assigning ranks to data look different from the mathematical and algorithmic standpoint than from the practical and astronomical one.

Ordering data is important for (1) identifying and removing outliers, (2) characterizing the data distribution quickly (for example, current contour plotting can be accelerated), and (3) updating statistics efficiently, by looking only at neighbors instead of processing the whole data set.

The other reason that affine invariant ordering of multivariate data finds no use in astronomy can be attributed to its nonparametric nature. So far, my impression is that nonparametric statistics is relatively unknown territory for astronomers. Unless a nonparametric statistic is designed for hypothesis testing or interval estimation, it does not give the confidence levels or intervals that astronomers always like to report: “How confident is the result of ordering multivariate data?” or “What is the N sigma uncertainty that this data point is the center, within plus or minus 2?” The nonparametric bootstrap and jackknife, which could compensate for the fact that a nonparametric statistic offers only a point estimate (best fit), are rarely used for computing uncertainties. Regardless of the lack of popularity of these robust point estimators from nonparametric statistics, I believe that the notion of data depth and nonparametric methods would help astronomers retrieve summary statistics quickly from massive, multidimensional, streaming data in future surveys. This is the primary reason for introducing and advertising data depth in this post.

Data depth is a statistic that measures ranks of multivariate data. It assigns a numeric value to a vector (data point) based on its centrality relative to a data cloud. The statistical depth satisfies the following conditions:

- affine invariance

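- maximality at the center: the depth-based median attains the highest depth, just as the datum of maximum value holds the highest rank in univariate data.

- monotonicity: depth decreases as a point moves away from the deepest point along any ray.

- vanishing at infinity: depth goes to zero as the Euclidean distance of the datum from the center goes to infinity.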
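To make these conditions tangible, here is a small sketch using the Mahalanobis depth, 1 / (1 + d²), where d is the Mahalanobis distance to the center. Using the sample mean and sample covariance as the center and scatter is my assumption for illustration (robust estimates could be substituted), and the matrix `A` and offset `b` are arbitrary.

```python
import numpy as np


def mahalanobis_depth(points, cloud):
    """Depth 1 / (1 + d^2), with d the Mahalanobis distance to the cloud's sample mean."""
    center = cloud.mean(axis=0)
    cov = np.cov(cloud, rowvar=False)
    diff = points - center
    # Row-wise quadratic form diff_i^T cov^{-1} diff_i, without forming the inverse.
    d2 = np.einsum("ij,ij->i", diff, np.linalg.solve(cov, diff.T).T)
    return 1.0 / (1.0 + d2)


rng = np.random.default_rng(1)  # arbitrary seed
X = rng.multivariate_normal([1.0, 1.0], [[1.0, -0.9], [-0.9, 1.0]], size=30)

# An arbitrary nonsingular linear map plus a shift (affine transformation).
A = np.array([[2.0, 1.0], [0.5, 3.0]])
b = np.array([10.0, -4.0])
Y = X @ A.T + b

depth_before = mahalanobis_depth(X, X)
depth_after = mahalanobis_depth(Y, Y)
print("affine invariant:", np.allclose(depth_before, depth_after))
print("depth at the center:", mahalanobis_depth(X.mean(axis=0)[None, :], X)[0])
print("depth far away:", mahalanobis_depth(np.array([[1e6, 1e6]]), X)[0])
```

Because the depth values are unchanged by the transformation, the ordering they induce is unchanged too, which is exactly what the coordinate-wise ranking could not deliver.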

Nowadays, observing in several bands is more typical than observing in two or, at most, three. Structures such as the bull’s eye or the fingers of God are not visible in a 1D projection if location coordinates are considered instead of colors. To understand such multidimensional data, as indicated above, the topic of this [MADS], data depth, might enhance the efficiency of data pipelines and of computing summary statistics. It is related to handling multivariate outliers and to storing or discarding unnecessary or unscientific data. Data points on the surface of a data cloud, or tiny parasite-like clumps accompanying the important data clouds, are neither visible nor identifiable when the whole data set is projected onto one or two dimensions. From data depths we can define a sequence of summary statistics that match the traditional mean, standard deviation, skewness, and kurtosis. If a datum or a tiny data cloud does not belong to the main set, we can discard those outliers for statistical reasons and separate them from the main archive or catalog. I’d like to remind you that a star of modest color does not necessarily belong to the main sequence: if you use a 1D histogram projected onto a color axis, you will not know whether this star is a pre-main-sequence star, a giant star, or a white dwarf. This awareness of seeing a data set in a multidimensional space, and of adopting suitable multivariate statistics, is critical when a query is executed on massive and streaming data. Otherwise, one would get biased data due to coordinate-wise trimming. Also, algorithmically efficient methods based on data depth could avoid wasting CPU time, storage, and human labor for validation.

We often see data trimmed based on univariate projections and N sigma rules. Such trimming tends to remove precious data points. I want a strategy that removes parasites, not my long tail. If the backbone is the principal axis, the tail and a big nose are more outlying along it than the parasites swimming near your belly. Coordinate-wise trimming with N sigma rules will chop the tail and the long nose first, and only later may or may not remove the parasites. Without human eye verification, virtual observers (people using virtual observatories for their scientific research) are likely to receive data with part of the head and tail missing but with the parasites still in place. This analogy applies to field stars versus stellar clusters, dwarf galaxies versus a main galaxy, binaries versus single stars, population II versus population I stars, etc.
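The tail-versus-parasite picture can be mimicked with a toy data set. This is a sketch under my own assumptions: the data are hand-built (a thin body along the diagonal, six "tail" points, and one "parasite" beside the belly), the cut is a coordinate-wise 2 sigma rule, and the Mahalanobis depth with sample mean and covariance stands in for a depth function.

```python
import numpy as np

u = np.array([1.0, 1.0]) / np.sqrt(2.0)   # backbone (principal) axis
v = np.array([1.0, -1.0]) / np.sqrt(2.0)  # direction across the body

t_body = np.linspace(-1.0, 1.0, 41)             # positions along the backbone
w_body = 0.05 * (-1.0) ** np.arange(41)         # small alternating thickness
body = np.outer(t_body, u) + np.outer(w_body, v)
tail = np.outer([-4.0, -3.5, -3.0, 3.0, 3.5, 4.0], u)  # long nose and tail
parasite = 0.8 * v[None, :]                     # off-axis, near the belly
data = np.vstack([body, tail, parasite])
is_tail = np.r_[np.zeros(41, dtype=bool), np.ones(6, dtype=bool), False]
parasite_idx = len(data) - 1

# Coordinate-wise 2 sigma rule: keep a point only if both coordinates pass.
z = np.abs(data - data.mean(axis=0)) / data.std(axis=0)
kept_by_sigma_rule = np.all(z <= 2.0, axis=1)

# Mahalanobis depth relative to the whole cloud.
diff = data - data.mean(axis=0)
cov = np.cov(data, rowvar=False)
d2 = np.einsum("ij,ij->i", diff, np.linalg.solve(cov, diff.T).T)
depth = 1.0 / (1.0 + d2)
least_deep = int(np.argmin(depth))

print("tail points surviving the sigma rule:", int(kept_by_sigma_rule[is_tail].sum()))
print("parasite survives the sigma rule:", bool(kept_by_sigma_rule[parasite_idx]))
print("least deep point is the parasite:", least_deep == parasite_idx)
```

In this construction the sigma rule removes the whole nose and tail while leaving the parasite, whereas the depth ordering flags the parasite as the most outlying point. The sample mean and covariance are themselves sensitive to outliers, so a more robust depth would be a reasonable substitute on real data.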

Outliers are not necessarily bad guys. Important discoveries have been made by looking into outliers. My point is that it is important to identify outliers with respect to the multidimensional data distribution, instead of after projecting the data onto the backbone axis.

To learn more about data depth, I recommend these three papers, long but crispy and delicious to read.

- Multivariate analysis by data depth: Descriptive statistics, graphics and inference (with discussion)

R. Liu, J. Parelius, and K. Singh (1999) Ann. Stat. vol. 27 pp. 783-858

(It’s a comprehensive survey paper on data depth.)

- Mathematics and the Picturing of Data

Tukey, J.W. (1974) Proceedings of the International Congress of Mathematicians, 2, 523-531

- The Ordering of Multivariate Data

Barnett, V. (1976) J. R. Stat. Soc. A, 139(3), 318-355

(It answered, more than three decades ago, how to order multivariate data.)

- You might want to check publications by R. Serfling (scroll down a bit).

As we have various distance metrics, there are various statistical depths. I already mentioned one in my [MADS] Mahalanobis distance post. Here is an incomplete list of data depths that I have heard of:

- Mahalanobis depth

- simplicial depth

- half-space depth (Tukey depth)

- Oja depth

- convex hull peeling depth

- projection depth

- majority depth

I do not know the definitions of all these depths or how to compute each of them. (General notions of statistical depth function, Zuo and Serfling (2000, Annals of Statistics, 28, pp. 461-482), is very useful for a theoretical understanding of statistical data depth.) My understanding is that, except for the Mahalanobis depth, the computational complexity of the other depths is relatively simple. The reason for excluding the Mahalanobis depth is that it requires estimating a large covariance matrix and inverting it. Ordering multivariate data with these depth measures, including assigning medians and quantiles, is therefore statistically more robust than relying on coordinate-wise means or additive errors. Removing outliers based on statistical depth also makes more statistical sense while being less burdensome for computers and human laborers. Speaking of contouring: once ranks have been assigned as numeric values to each point, collecting the data points at a certain rank level leads to a quick contour.
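As one illustration of a depth from the list, and of the quick contour idea, here is a rough sketch of the half-space (Tukey) depth in two dimensions. It scans a fixed grid of directions, which is my simplification: the result is only an upper bound on the true depth, and the function name `halfspace_depth_2d`, the 360 directions, and the seed are assumptions, not an exact or efficient algorithm.

```python
import numpy as np


def halfspace_depth_2d(points, cloud, n_directions=360):
    """Approximate Tukey depth: the smallest fraction of the cloud found in a
    closed half-plane whose boundary passes through the point, over a direction grid."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_directions, endpoint=False)
    directions = np.column_stack([np.cos(angles), np.sin(angles)])  # (k, 2)
    proj_cloud = cloud @ directions.T    # (n, k) projections of the cloud
    proj_points = points @ directions.T  # (m, k) projections of the query points
    # For each query point and direction: fraction of cloud points on or beyond it.
    frac = (proj_cloud[None, :, :] >= proj_points[:, None, :]).mean(axis=1)
    return frac.min(axis=1)


rng = np.random.default_rng(2)  # arbitrary seed
X = rng.multivariate_normal([1.0, 1.0], [[1.0, -0.9], [-0.9, 1.0]], size=30)

depth = halfspace_depth_2d(X, X)
depth_median = X[np.argmax(depth)]           # deepest data point, a multivariate median
inner_half = X[depth >= np.median(depth)]    # points in the deeper half of the cloud

print("deepest point:", depth_median)
print("points in the central region:", len(inner_half))
```

Drawing the convex hull of `inner_half` would give one quick depth contour; repeating this for other depth levels gives nested contours without any density estimation.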

The story turned out to be longer than I expected, but the one take home message is

Q:How would you assign orders to multivariate data?

A:Statisticians have discussed the notion of data depth to answer the question.

———————————————————————

NOTE: The usages of depth in statistics and astronomy are different. In astronomy, depth is associated with the distance that a telescope can reach. In fact, it’s a euphemism. To be more precise, the depth of a telescope is roughly defined by how faint a source can be before the telescope can no longer catch photons from it. Deep sky surveys imply that the telescope(s) in the survey project can see deeper, or see dimmer objects, than previous ones. In statistics, depth is a bit abstract and conceptual, depending on its definition. Data depth is the multivariate version of the quantile for univariate data, where one can easily order data points from the smallest to the largest.
