-Jun 11th, 2009| 03:52 pm | Posted by hlee

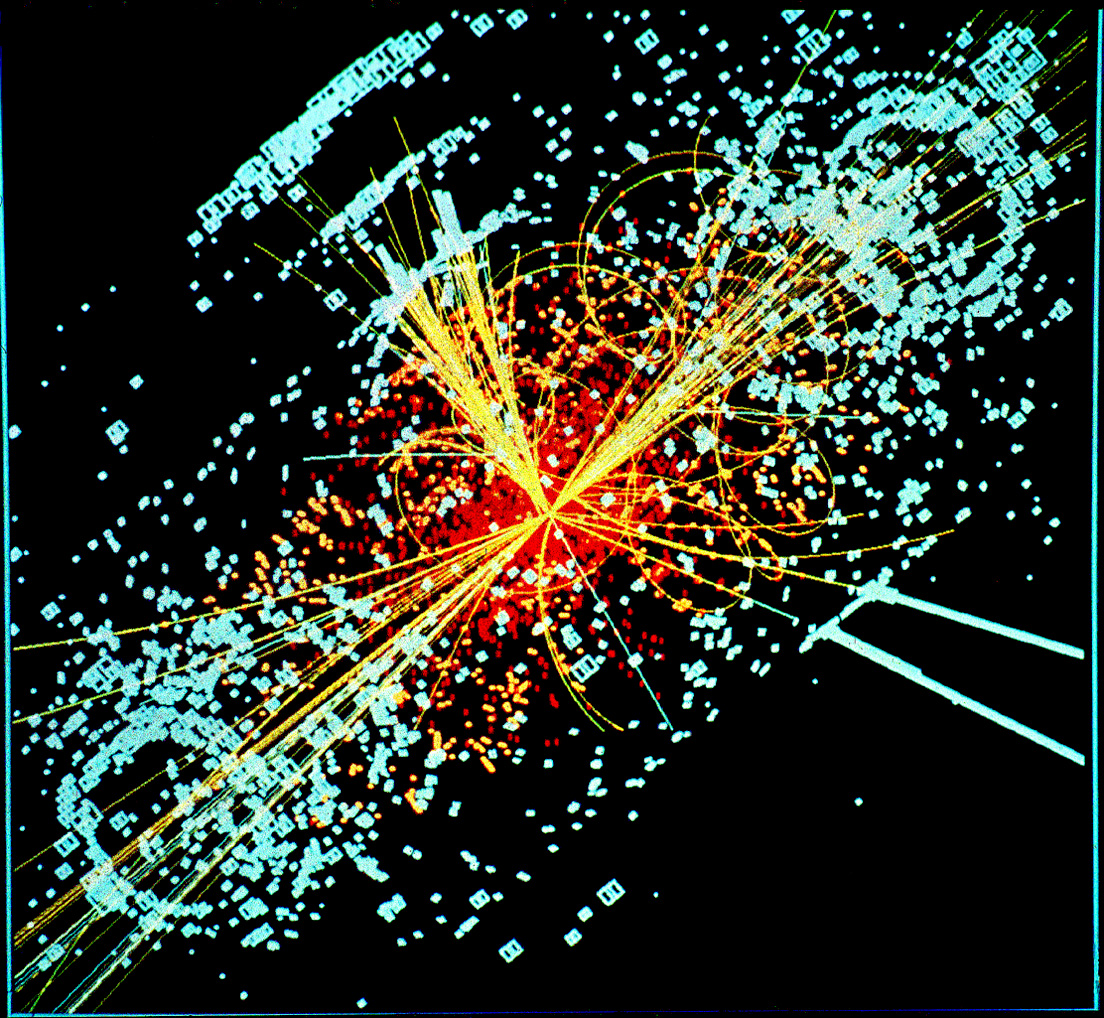

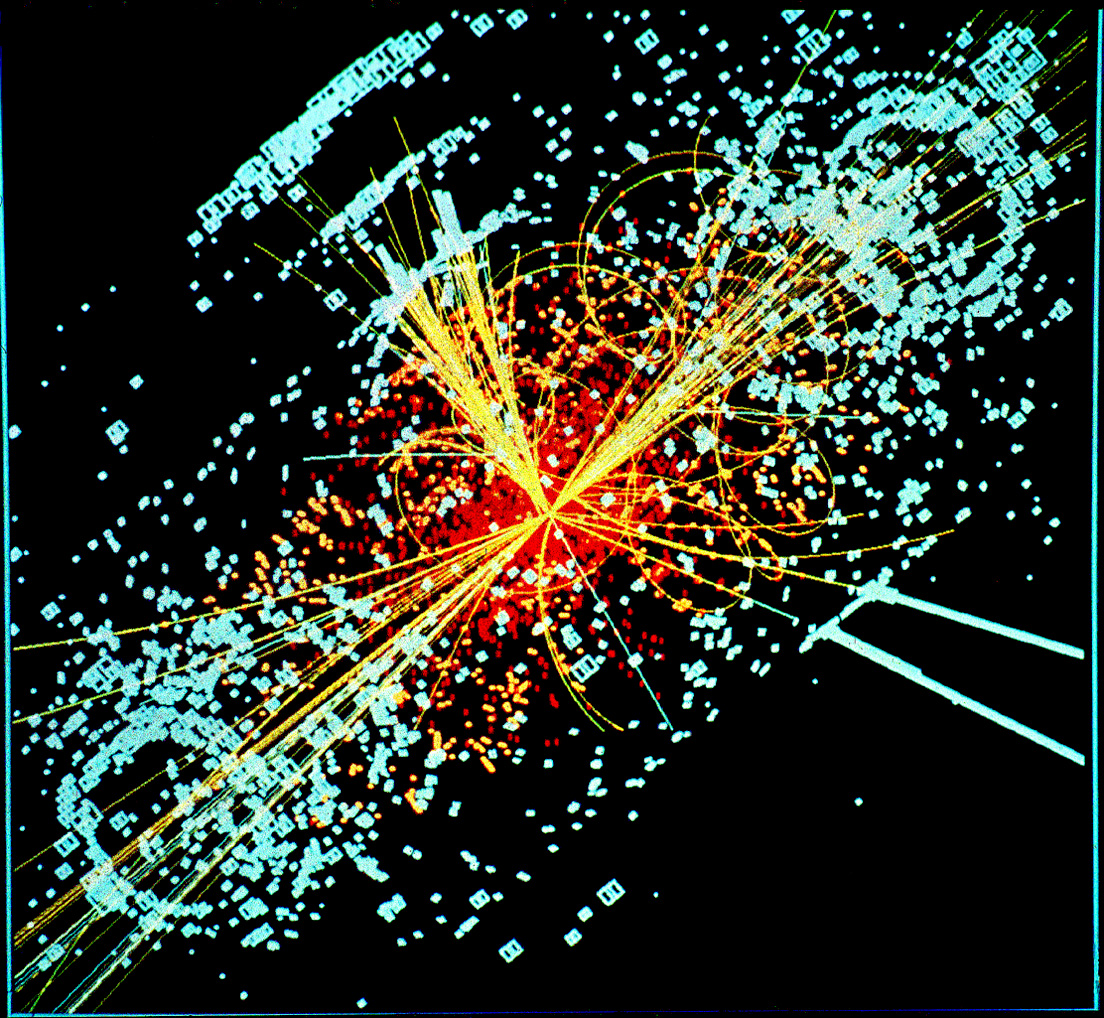

I was at the SUSY 09 public lecture given by Nobel laureate Frank Wilczek, known for his work on QCD (quantum chromodynamics). As far as I know, SUSY is the abbreviation of SUperSYmmetry in particle physics. According to the speaker's graph, finding such supersymmetric partner particles (antimatter? I'm afraid I read "Angels and Demons" too quickly) would support the unification of the electromagnetic, weak, and strong forces, and even gravity. I won't go into the details of particle physics and the standard model, for obvious reasons. Instead, I'd like to show this image from Wikipedia and discuss my related questions.

…Continue reading»

Tags: cliche, collision, identifiability, identification, irony, LHC, Power, reconstruction, source detection, subparticle, supersymmetry, SUSY, TRACE, type I error, Type II error, uncertainty principle, unification, youtube

Category: Cross-Cultural, Data Processing, High-Energy, Misc, Quotes, Uncertainty

-Feb 20th, 2009| 07:48 pm | Posted by hlee

[stat.AP:0811.1663]

Open Statistical Issues in Particle Physics by Louis Lyons

My recollection of meeting Prof. L. Lyons is that he is very kind and a good listener. I was delighted to see his introductory article about particle physics and its statistical challenges in my [arxiv:stat] email subscription. …Continue reading»

Tags: chi-square, chi-square minimization, coverage, hypothesis testing, L.Lyons, LHC, LRT, particle physics, posterior distribution

Category: arXiv, Bayesian, Cross-Cultural, Data Processing, Frequentist, High-Energy, Methods, Physics, Stat

-Sep 10th, 2008| 02:38 am | Posted by hlee

10:00am local time, Sept. 10th, 2008

Like the first light from Fermi (formerly GLAST), the LHC First Beam is a big moment for particle physicists. Find more at http://lhc-first-beam.web.cern.ch/lhc-first-beam/Welcome.html. …Continue reading»

-Jul 23rd, 2008| 01:00 pm | Posted by vlk

With the LHC coming on line anon, it is appropriate to highlight the Banff Challenge, which was designed as a way to figure out how to place bounds on the mass of the Higgs boson. The equations that were to be solved are quite general, and are in fact the first attempt that I know of where calibration data are directly and explicitly included in the analysis. …Continue reading»

-Oct 3rd, 2007| 04:08 pm | Posted by aconnors

This is a long comment on the October 3, 2007 Quote of the Week, by Andrew Gelman. His "folk theorem" ascribes computational difficulties to problems with one's model.

My thoughts:

"Model" has two meanings here. A physicist or astronomer would automatically read it as a model of the source, or the physics, or the sky. It has taken me a long time to see it a little more from a statistician's perspective, where it refers to the full statistical model.

For example, in low-count high-energy physics, there has been a great deal of heated discussion over how to handle "negative confidence intervals" (see, for example, PhyStat2003). That is, when using the statistical tools traditional to that community, one had such a large number of trials and such a low expected count rate that a significant number of "confidence intervals" for source intensity lay wholly below zero. Further, there were more of these than expected (based on the assumptions in those traditional statistical tools). Statisticians such as David van Dyk pointed out that this was a sign of "model mismatch". But (in my view) this was not understood at first: it was taken as a description of physics model mismatch. Of course, what he (and others) meant was statistical model mismatch. That is, somewhere along the data-processing path, Gauss-Normal assumptions had been made that were inaccurate for (essentially) low-count Poisson data. Once that was taken into account, the whole "negative confidence interval" problem went away. Recently, there has been a great deal of coordinated work to correct this and compute all intervals properly.
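To make the low-count point concrete, here is a minimal sketch, assuming a single counting experiment with observed counts n and a known background b (both illustrative, not from any PhyStat dataset). The naive Gauss-Normal interval treats n - b as Normal with sigma = sqrt(n), and can land entirely below zero. For contrast, it computes a flat-prior Bayesian upper limit on s >= 0 using the Poisson(s + b) likelihood directly. That Bayesian limit is just one of several Poisson-aware options, picked because it is short to code; it is not presented as the specific procedure adopted by the PhyStat community.

```python
import math


def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for N ~ Poisson(mu), summed term by term."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total


def gaussian_interval(n: int, b: float, z: float = 1.96) -> tuple[float, float]:
    """Naive Gauss-Normal interval for s: s_hat = n - b, sigma = sqrt(n)."""
    s_hat = n - b
    sigma = math.sqrt(n)
    return s_hat - z * sigma, s_hat + z * sigma


def bayes_upper_limit(n: int, b: float, cl: float = 0.95, tol: float = 1e-10) -> float:
    """Upper limit on s >= 0 with a flat prior and Poisson(s + b) likelihood.

    For integer n the posterior tail probability beyond U equals
    poisson_cdf(n, b + U) / poisson_cdf(n, b); solve tail = 1 - cl by bisection.
    """
    target = 1.0 - cl
    norm = poisson_cdf(n, b)
    lo, hi = 0.0, 1.0
    # Expand the bracket until the tail probability drops below the target.
    while poisson_cdf(n, b + hi) / norm > target:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if poisson_cdf(n, b + mid) / norm > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    n_obs, background = 1, 5.0  # illustrative low-count case
    print("Gaussian 95% interval:", gaussian_interval(n_obs, background))
    print("Bayesian 95% upper limit:", bayes_upper_limit(n_obs, background))
```

With n = 1 and b = 5, the Gaussian interval is (-5.96, -2.04), wholly below zero, while the Poisson-based limit is a positive number of roughly 3.4. For n = 0 the flat-prior limit is ln 20 (about 3.0) whatever b is, which is one known quirk of this particular choice.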

This brings me to my second point. I want to raise a provocative corollary to Gelman's folk theorem:

When the “error bars” or “uncertainties” are very hard to calculate, it is usually because of a problem with the model, statistical or otherwise.

One can see this (I claim) in any method that allows one to get a nice “best estimate” or a nice “visualization”, but for which there is no clear procedure (or only an UNUSUALLY long one based on some kind of semi-parametric bootstrapping) for uncertainty estimates. This can be (not always!) a particular pitfall of “ad-hoc” methods, which may at first appear very speedy and/or visually compelling, but then may not have a statistics/probability structure through which to synthesize the significance of the results in an efficient way.

-Sep 15th, 2007| 01:33 am | Posted by hlee

It occurred to me that some material useful for the Chandra calibration problem, which CHASC is working on, could be found in the PHYSTAT conferences. Thanks to the recording technology adopted by physicists (I haven't seen any statistics conference offer what I got from PHYSTAT-LHC 2007), I was able to go through some video files from PHYSTAT-LHC 2007. The files are recorded lectures and lecture notes, available from the PHYSTAT-LHC 2007 Program.

…Continue reading»
